AI labs are turning to crowdsourced platforms like Chatbot Arena to test their latest models, but some experts question the ethics and validity of this approach.

Emily Bender, a linguistics professor, criticizes Chatbot Arena's benchmarking process, arguing that relying on user votes is a flawed way to evaluate models.

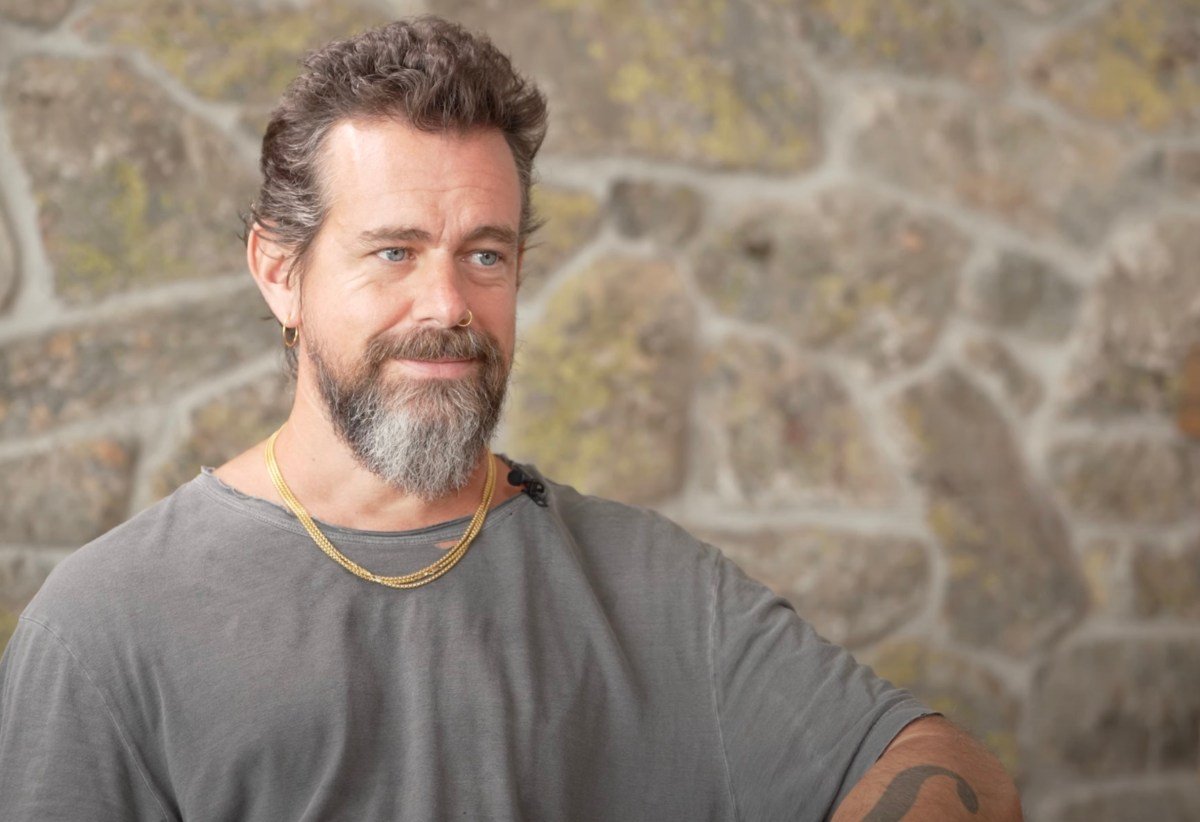

Asmelash Teka Hadgu believes that AI labs are co-opting benchmarks like Chatbot Arena to make exaggerated claims, citing Meta's controversial handling of its Llama 4 Maverick model.

Hadgu and Kristine Gloria argue that model evaluators should be paid for their work, drawing a parallel to exploitative practices in the data labeling industry.

Matt Fredrikson of Gray Swan AI emphasizes the importance of internal benchmarks and paid private evaluations in addition to public crowdsourced benchmarks.

Alex Atallah of OpenRouter and Wei-Lin Chiang of LMArena, the organization that maintains Chatbot Arena, stress that open benchmarking alone is not enough and that a range of testing methods is needed to evaluate models reliably.

Chiang explains that Chatbot Arena has updated its policies to prevent discrepancies like the one involving Maverick and to reinforce its commitment to fair evaluations, with the aim of providing an open, transparent space for the community to engage with AI.