On Saturday, Triplegangers CEO Oleksandr Tomchuk was alerted that his company’s e-commerce site was down. It looked to be some kind of distributed denial-of-service attack. He soon discovered the culprit was a bot from OpenAI that was relentlessly attempting to scrape his entire, enormous site.

OpenAI was sending “tens of thousands” of server requests trying to download all of it: hundreds of thousands of photos, along with their detailed descriptions. “Their crawlers were crushing our site,” he said. “It was basically a DDoS attack.”

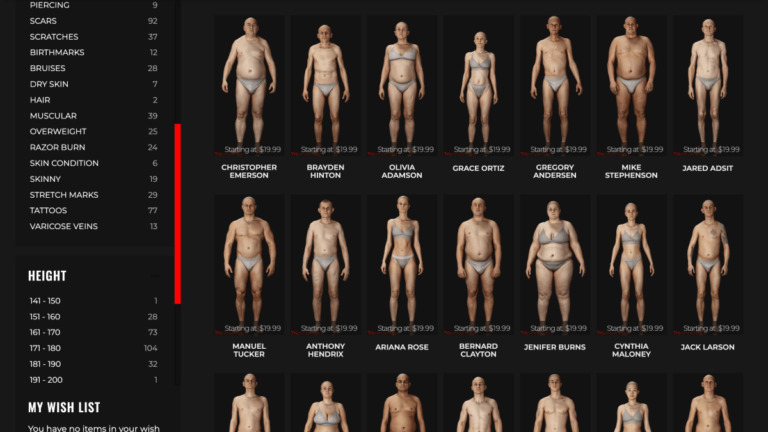

Triplegangers’ website is its business. The seven-employee company has spent over a decade assembling what it calls the largest database of “human digital doubles” on the web, meaning 3D image files scanned from actual human models. It sells the 3D object files, as well as photos — everything from hands to hair, skin, and full bodies — to 3D artists, video game makers, anyone who needs to digitally recreate authentic human characteristics.

Can’t know for certain what was taken

By Wednesday, after days of OpenAI’s bot returning, Triplegangers had a properly configured robots.txt file in place, along with a Cloudflare account set up to block OpenAI’s GPTBot and several other bots Tomchuk discovered. He is also hopeful he has blocked crawlers from other AI model companies. On Thursday morning, the site didn’t crash, he said.
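For readers who want to check their own setup, here is a minimal sketch of what a robots.txt rule blocking GPTBot might look like, verified with Python's standard `urllib.robotparser`. The rules, the `is_allowed` helper, and the example.com URL are illustrative assumptions, not Triplegangers' actual configuration. Keep in mind that robots.txt is a request that a crawler chooses to honor, not an enforcement mechanism.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (an assumption for illustration).
# "GPTBot" is the user-agent token named in the article.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/models/hand-scan"  # placeholder URL
    for agent in ("GPTBot", "Mozilla/5.0"):
        verdict = "allowed" if is_allowed(ROBOTS_TXT, agent, url) else "blocked"
        print(f"{agent}: {verdict}")
```

A check like this only confirms that the file says what you intend. Blocking crawlers that ignore the file requires something at the network level, such as the Cloudflare rules Tomchuk set up.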

But Tomchuk still has no reasonable way to find out exactly what OpenAI successfully took or to get that material removed. He’s found no way to contact OpenAI and ask. OpenAI did not respond to TechCrunch’s request for comment. And OpenAI has so far failed to deliver its long-promised opt-out tool.

If you own a small online business, beware of AI bots that could be stealing your copyrighted content without you even knowing. Many website owners have reported incidents where AI bots crashed their sites and caused unexpected costs, like running up their AWS bills.

AI bots on the rise

In 2024, the problem escalated, with a significant increase in “general invalid traffic” caused by AI crawlers and scrapers. This type of traffic doesn’t come from real users, which underscores how much bot activity websites now have to absorb.

Protecting your website

To combat these AI bots, website owners like Tomchuk recommend actively monitoring log activity to detect any suspicious behavior. It’s essential to stay vigilant and take measures to protect your online content from being scraped without permission.
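As a rough illustration of that kind of log monitoring, the sketch below tallies requests per user agent from web server access logs and flags heavy or known-crawler agents. It assumes logs in the common "combined" format, where the user agent is the last quoted field. The `WATCHED_TOKENS` list, the threshold, and the function names are hypothetical choices to adapt to your own site.

```python
import re
from collections import Counter

# Assumes the "combined" access log format, where the user agent
# is the final double-quoted field on each line.
LOG_LINE = re.compile(r'^\S+ .*"(?P<agent>[^"]*)"$')

# Hypothetical watch list; extend with whatever crawlers you see.
WATCHED_TOKENS = ("GPTBot",)


def count_user_agents(lines):
    """Count requests per user-agent string, skipping unparseable lines."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match:
            counts[match.group("agent")] += 1
    return counts


def flag_agents(counts, threshold=1000):
    """Return agents that exceed the threshold or contain a watched token."""
    flagged = {}
    for agent, n in counts.items():
        watched = any(t.lower() in agent.lower() for t in WATCHED_TOKENS)
        if n >= threshold or watched:
            flagged[agent] = n
    return flagged


if __name__ == "__main__":
    # Made-up sample lines; in practice, iterate over your access log file.
    sample = [
        '203.0.113.5 - - [10/Jan/2025:10:00:00 +0000] "GET /a HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
        '203.0.113.6 - - [10/Jan/2025:10:00:01 +0000] "GET /b HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
        '198.51.100.7 - - [10/Jan/2025:10:00:02 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    ]
    for agent, n in flag_agents(count_user_agents(sample)).items():
        print(f"{n} requests from {agent}")
```

User-agent strings can be spoofed, so a count like this is a starting point for investigation and not proof of who is behind the traffic.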

In a way, dealing with AI bots can feel like a mafia shakedown, where they will take what they want unless you have the right defenses in place. Instead of scraping data without consent, these bots should be asking for permission to use copyrighted materials.
